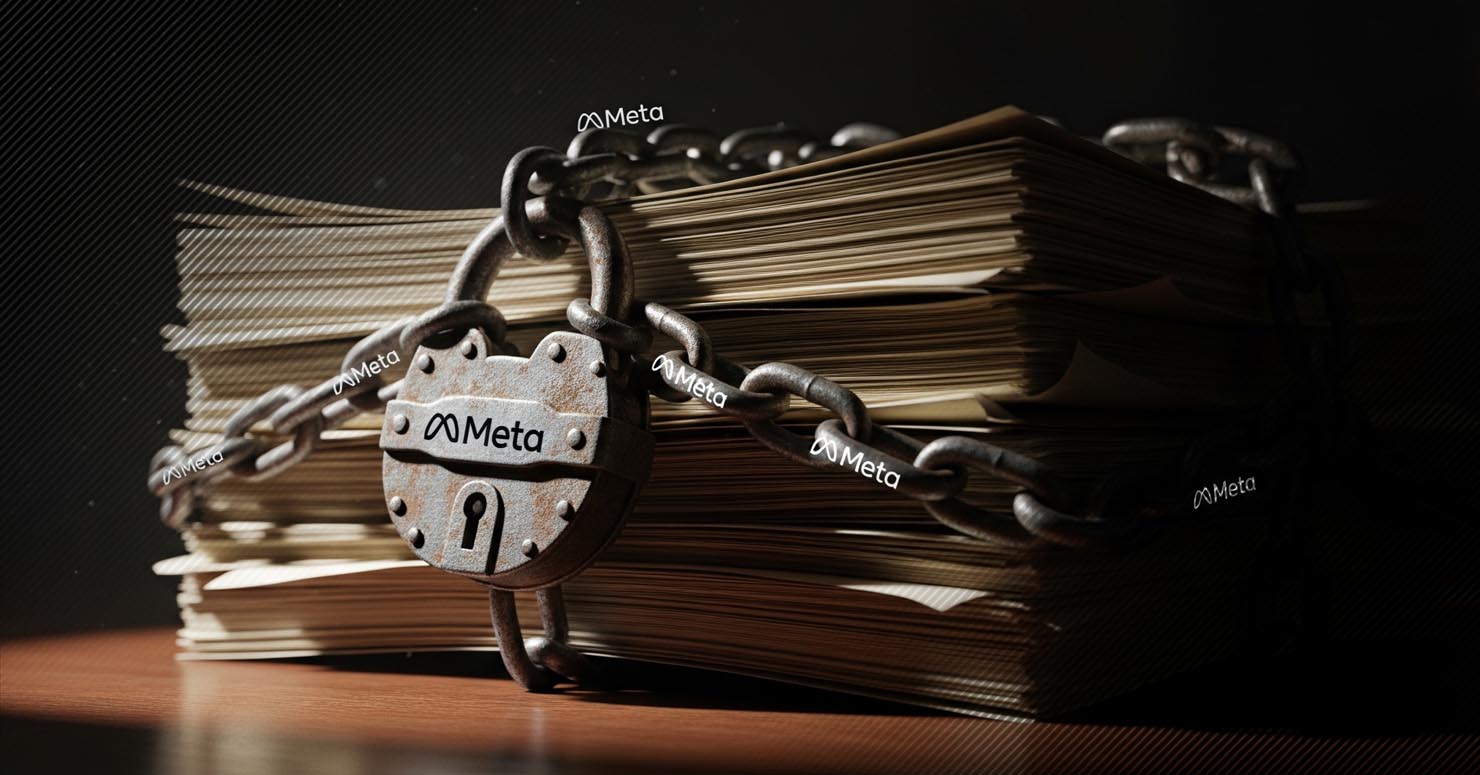

In what could be a watershed moment for Big Tech accountability, four former Meta employees have come forward as whistleblowers, alleging in a new lawsuit that the company deliberately suppressed its own internal research. The damning claim: that research showed Meta’s products, including Instagram and Facebook, are harmful to the mental and physical health of children and teens. The allegation that Meta suppressed children’s safety research is more than a PR crisis; it accuses the company of a calculated decision to prioritize profit over the well-being of its youngest users.

This lawsuit, filed in California, is more than just another legal challenge. It’s an insider’s account of an alleged cover-up, painting a picture of a company fully aware of the harm its algorithms can cause, but choosing to hide the evidence. As we’ve seen with other platforms where AI chatbots have failed in mental health crises, the intersection of technology and youth mental health is a minefield, and these new allegations suggest a conscious disregard for the risks.

This report by Basma Imam dives into the explosive claims, examines the potential motivations behind such a cover-up, and analyzes what this means for the future of platform regulation.

The Bombshell Allegations: What the Whistleblowers Claim

The lawsuit is being brought by four former employees who worked on Meta’s well-being and safety teams.

According to the detailed report from TechCrunch, the core of their allegation is that Meta leadership actively and deliberately shelved internal studies that produced inconvenient results.

The key claims include:

- Suppression of Negative Findings: Research that showed a correlation between Instagram use and issues like eating disorders, body dysmorphia, and depression in teens was allegedly buried, or had its conclusions watered down.

- Ignoring Internal Experts: The whistleblowers claim that their teams of experts repeatedly warned leadership about the addictive nature of the platform’s features and their potential for harm, but these warnings were ignored in favor of features that boosted engagement.

- Misleading Public Statements: The lawsuit alleges that while Meta was publicly claiming to be committed to youth safety, it was privately aware of children’s safety research, which it had suppressed, that contradicted these claims.

This isn’t the first time a whistleblower has sounded the alarm. The new lawsuit builds on the foundations laid by Frances Haugen, whose testimony in 2021 first brought many of these issues to light.

The “Why”: Prioritizing Engagement Over Well-Being

If these allegations are true, they point to a fundamental conflict at the heart of Meta’s business model.

Social media platforms make money by keeping users engaged for as long as possible to serve them more ads.

The whistleblowers’ claims suggest that the features most effective at driving this engagement are also the ones most likely to be harmful to young, developing minds.

The alleged decision to suppress this research would have been a business decision.

Acknowledging the harm would have forced the company to make changes that could have reduced user engagement and, therefore, revenue.

This is a chilling example of the ethical dilemmas that arise when the primary goal of an AI-driven algorithm is to maximize a single metric (time on site) without regard for the human cost.

A Pattern of Behavior?

This lawsuit does not exist in a vacuum. It arrives at a time when tech companies are facing increasing legal pressure over their impact on youth.

OpenAI is currently facing a wrongful death lawsuit, which prompted the rollout of ChatGPT parental controls as a reactive measure.

The claims that Meta suppressed children’s safety research fit into a broader narrative of tech companies failing to take proactive responsibility for the societal impact of their products.

Critics argue that these companies have known about the potential for harm for years but have consistently chosen to downplay the risks until forced to act by whistleblowers and legal action.

This lawsuit is likely to add significant fuel to that fire.

Frequently Asked Questions (FAQ)

1. What is the core claim of the new lawsuit against Meta?

Four former employees allege that Meta suppressed children’s safety research that showed its platforms, like Instagram, were harmful to the mental and physical health of young users.

2. Is this the first time Meta has been accused of this?

No. This lawsuit follows the famous 2021 testimony of whistleblower Frances Haugen, who made similar claims and released thousands of internal documents, known as the “Facebook Papers,” to support them.

3. What has been Meta’s response?

Meta has consistently denied these claims, stating that the research is often taken out of context and that the company has invested billions in safety and well-being features for its platforms.

4. What could be the consequences if these allegations are proven true?

If proven true, Meta could face massive financial penalties from regulators and in civil lawsuits. More importantly, such a finding would severely damage public trust in the company and could lead to much stricter government regulation of social media platforms. Proof that Meta suppressed children’s safety research would mark a landmark moment in tech accountability.