For as long as I’ve been online, the internet’s approach to age gates has felt like a running joke. That little checkbox asking, “Are you over 18?” was a barrier that a curious child could bypass with a single, dishonest click. It was security theater, and we all knew it. That’s why, when I heard about YouTube’s new plan, I was intrigued.

The company is rolling out an AI age-estimation system to figure out whether a user is a teen. The goal is to improve online child safety, but as I look closer, I can’t shake the feeling that this is one of those digital privacy trends that solves one problem by creating a dozen new ones.

So, What’s Actually Changing on YouTube?

Starting in August 2025 for a small group of users in the US, YouTube is moving beyond just asking for your birthday. Instead, it’s deploying a machine learning model to guess your age based on a variety of signals.

Here’s how it works: the AI will look at things like the types of videos you search for, the content you watch, and even how long you’ve had your account. If the system infers that you’re under 18, it will automatically apply YouTube’s restrictions for minors, even if you told it you were born in 1980.

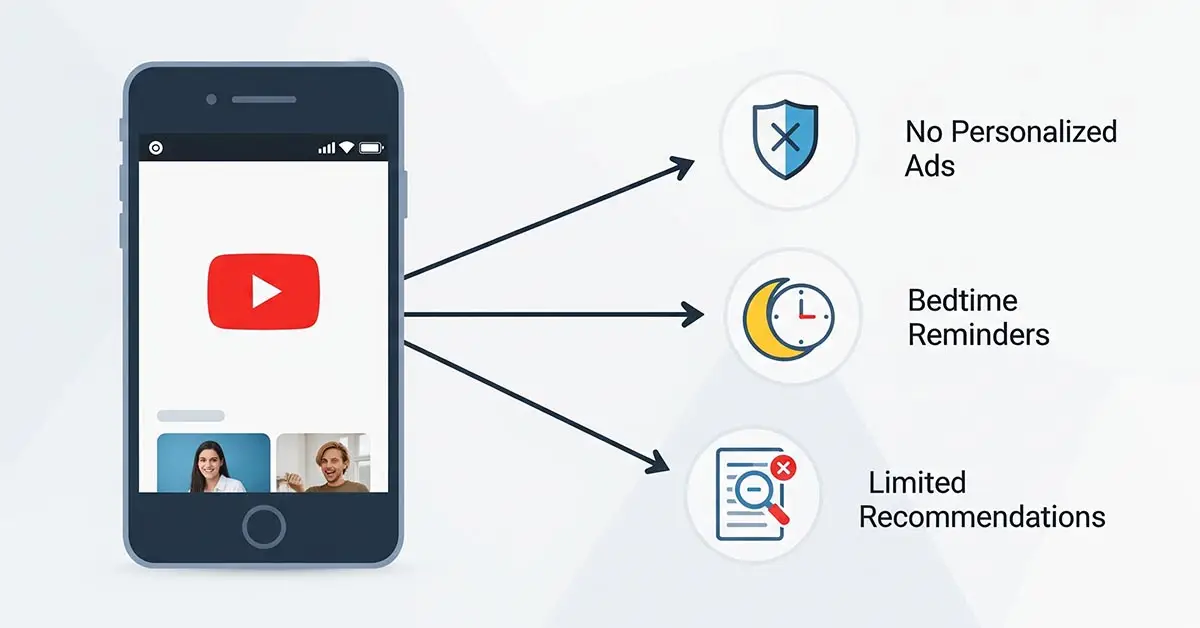

These restrictions aren’t new, but their application will be. For accounts flagged as belonging to teens, YouTube will:

- Disable personalized advertising.

- Turn on digital wellbeing tools like “take a break” and bedtime reminders by default.

- Limit repetitive recommendations for content that could be problematic, like videos about body image.

If the system gets it wrong, and I suddenly find myself being told to go to bed at 10 PM, YouTube says I’ll have the option to verify my age using a credit card or a government ID.
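To make that flow concrete, here is a minimal Python sketch of the decision logic as I understand it from YouTube’s description. Everything in it is my own illustration: the `Account` and `Settings` classes, their field names, and the `resolve_settings` function are hypothetical, and the `estimated_minor` flag simply stands in for whatever the real model outputs. It is not YouTube’s code.

```python
from dataclasses import dataclass


@dataclass
class Account:
    declared_birth_year: int          # what the user typed at sign-up
    estimated_minor: bool             # hypothetical output of the age-estimation model
    age_verified_adult: bool = False  # True after a credit card or government ID check


@dataclass
class Settings:
    personalized_ads: bool = True
    break_reminders_default: bool = False
    bedtime_reminders_default: bool = False
    limit_repetitive_sensitive_recs: bool = False


def resolve_settings(account: Account) -> Settings:
    """Pick the settings for an account (illustrative only).

    Note that declared_birth_year is never consulted: the model's inference
    wins over self-declaration, and only explicit verification overrides it.
    """
    if account.estimated_minor and not account.age_verified_adult:
        return Settings(
            personalized_ads=False,
            break_reminders_default=True,
            bedtime_reminders_default=True,
            limit_repetitive_sensitive_recs=True,
        )
    return Settings()


# An adult born in 1980 whom the model flags as a teen gets the teen settings
# until they verify their age.
flagged = Account(declared_birth_year=1980, estimated_minor=True)
print(resolve_settings(flagged))
```

The detail worth noticing is that the declared birth year plays no part in the outcome. That is the whole shift in a few lines: self-declaration no longer decides anything.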

The Tech Behind the Guesswork: How AI Age Verification Works

This move by YouTube isn’t happening in a vacuum. It’s part of a much larger push towards AI age verification across the internet. The technology behind it is both fascinating and a little unsettling.

One of the most common methods is facial age estimation. Companies like Yoti and AuthenticID have developed AI that can estimate a person’s age from a selfie with surprising accuracy. These systems analyze facial features and compare them to millions of other faces to make a guess. According to Yoti, its tech is 99.3% accurate at correctly identifying 13- to 17-year-olds as being under 21.
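One way to read that “under 21” figure is as a safety buffer: because any estimate carries some error, a service protecting under-18s can set its cutoff a few years higher and send everyone below it to a stronger check. The sketch below is my own illustration of that idea in Python. The function name, the three-year buffer, and the sample estimates are all assumptions, not Yoti’s actual implementation.

```python
def needs_further_check(estimated_age: float,
                        age_of_interest: int = 18,
                        buffer_years: int = 3) -> bool:
    """Return True when the estimate falls below the buffered threshold.

    With the defaults, the threshold is 18 + 3 = 21, so anyone estimated
    as younger than 21 is asked for stronger proof of age.
    """
    return estimated_age < age_of_interest + buffer_years


# A 17-year-old whose face is over-estimated at 19.5 is still caught,
# because 19.5 is below the buffered threshold of 21.
print(needs_further_check(19.5))  # True

# An adult estimated at 28 passes without a further check.
print(needs_further_check(28.0))  # False
```

The trade-off is that young adults just over 18 will often land under the buffer too, which means more people being asked to hand over an ID.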

However, “accurate” doesn’t mean perfect. Studies have shown that these AI models can have biases, often being less accurate when estimating the age of women and people of color. The Electronic Frontier Foundation (EFF), a leading digital rights group, has warned that normalizing face scans for age checks is a slippery slope that could lead to other forms of biometric surveillance.

Why Now? The Global Push for a Safer Internet

So, why is this happening now? The simple answer is regulatory pressure. Governments around the world are tired of the ineffective checkbox and are demanding real action.

The most significant driver of this trend is the UK’s Online Safety Act, a massive piece of legislation that finally came into force in July 2025. Under these new rules, the UK’s media regulator, Ofcom, can levy enormous fines, up to 10% of a company’s global turnover, on any platform that fails to prevent minors from accessing harmful content. This law has forced major platforms like Reddit, X, and Pornhub to implement robust age verification systems for their UK users.

This is a clear example of how regional laws are shaping global tech trends. What starts in one major market often becomes the de facto standard for everyone else, as it’s easier for a global company like YouTube to implement one policy worldwide than to manage dozens of different ones.

My Big Concern: The Privacy Trade-Off

On paper, protecting children is a goal I wholeheartedly support. Ofcom’s own research found that 8% of UK children aged 8-14 had visited a pornographic site in a single month. That’s a problem that needs solving.

But as a user, I’m deeply uncomfortable with the proposed solutions. The idea of my viewing history being fed into an algorithm to determine my age feels invasive. What if I’m researching a topic for an article that happens to be popular with teens? Will I suddenly be locked out of content?

The alternative, uploading my government ID to Google, is even less appealing. We live in an era of constant data breaches. Creating massive, centralized databases of users’ ages and identities feels like a disaster waiting to happen. As one cyber expert, Jason Nurse, asked, “How can we be confident this data won’t be misused?”

This is the central tension of the tech trends for 2025: the push for safety and personalization often comes at the direct expense of privacy.

The Unavoidable Flaws and the Future

Beyond my privacy concerns, I also question how effective this will ultimately be. Determined teens have always found ways around digital barriers. In the UK, the moment the Online Safety Act went into effect, searches for VPNs surged by over 700% as users sought to bypass the new checks.

This new system from YouTube is a significant step up from a simple checkbox, but it’s not foolproof. It represents a fundamental shift in how we establish identity online, moving from self-declaration to algorithmic inference. It’s one of the core technologies of 2025 that will redefine our relationship with the platforms we use every day.

Ultimately, this trend forces us to confront a difficult question. We want an internet that is safe for children, but are we willing to create an internet where we must constantly prove who we are to an algorithm? For now, YouTube is treading carefully with a limited rollout. But this feels like the beginning of a much larger conversation about what a truly “safe” internet looks like, and what we’re willing to give up to get there.